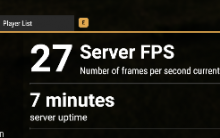

When the `-maxFPS` limit is applied to a Linux dedicated server and the server is traced with the Linux `strace` tool, it can be observed that the game logic implements this delay in a way that very likely hurts performance significantly.

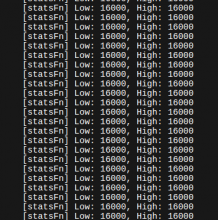

The trace shows that `clock_nanosleep(CLOCK_REALTIME, 0, {tv_sec=0, tv_nsec=16000000})` is called on each iteration of the game loop. The flags argument is 0, so this is a relative 16 ms sleep. Setting a breakpoint on this call in gdb shows that it comes from the Unix function `usleep`, called with the fixed value 16000.
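To reproduce the pattern seen in the trace outside the server, the sketch below models a frame as some work followed by a fixed relative `usleep()`. This is a model, not the server's code: the busy-wait standing in for game logic and the names `now_ns()`, `busy_work_ns()` and `average_frame_ns()` are hypothetical. It measures the average frame period with `CLOCK_MONOTONIC`.

```c
#define _DEFAULT_SOURCE /* for usleep() in glibc */
#include <stdint.h>
#include <time.h>
#include <unistd.h>

/* Current CLOCK_MONOTONIC time in nanoseconds. */
static int64_t now_ns(void)
{
    struct timespec ts;
    clock_gettime(CLOCK_MONOTONIC, &ts);
    return (int64_t)ts.tv_sec * 1000000000LL + ts.tv_nsec;
}

/* Hypothetical stand-in for game logic: spin for roughly work_ns. */
static void busy_work_ns(int64_t work_ns)
{
    int64_t end = now_ns() + work_ns;
    while (now_ns() < end)
        ;
}

/* Model of the traced loop: do the work, then a fixed relative usleep().
   Returns the measured average frame period in nanoseconds. */
static int64_t average_frame_ns(int frames, int64_t work_ns, useconds_t sleep_us)
{
    int64_t start = now_ns();
    for (int i = 0; i < frames; i++) {
        busy_work_ns(work_ns);
        usleep(sleep_us); /* relative: the work time is added on top */
    }
    return (now_ns() - start) / frames;
}
```

Calling `average_frame_ns(60, 8000000, 16000)` should report at least 24 ms per frame. Run under the preload library described later in this post, the same loop with `sleep_us` set to 16000 should instead settle near 16 ms per frame.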

As the game logic takes time to complete, using a fixed sleep interval here makes a busy, heavily loaded server wait longer than it should. For example, if the game logic takes 8 ms, the next frame starts 8 + 16 = 24 ms later, which limits the server to about 41.7 FPS. The more time spent in the game logic, the worse this becomes.
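The arithmetic above can be written out as two small functions (the names are illustrative). With a relative sleep, each frame costs the game-logic time plus the sleep; with an absolute deadline, the approach described next, the sleep only covers what is left of the period, so a frame costs the larger of the two. Both assume the sleep itself wakes up exactly on time.

```c
/* Relative sleep (the pattern in the trace): a frame costs the
   game-logic time plus the fixed sleep. */
double fps_relative_sleep(double work_ms, double sleep_ms)
{
    return 1000.0 / (work_ms + sleep_ms);
}

/* Absolute deadline: the sleep absorbs the work time,
   so a frame costs max(work_ms, period_ms). */
double fps_absolute_deadline(double work_ms, double period_ms)
{
    double frame_ms = work_ms > period_ms ? work_ms : period_ms;
    return 1000.0 / frame_ms;
}
```

For the 8 ms case, `fps_relative_sleep(8.0, 16.0)` gives about 41.7 FPS, while `fps_absolute_deadline(8.0, 16.0)` gives 62.5 FPS (16 ms per frame). Only when the logic itself exceeds the period, e.g. 20 ms, does the absolute version drop, to 50 FPS.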

The obvious, but still not correct, solution would be to time how long the game logic took and subtract that from the sleep; the wake-up latency of each sleep and the time between measuring and sleeping would still accumulate as drift from frame to frame. There is a much neater solution on Linux: `clock_nanosleep` supports absolute time values. By reading the current monotonic time from the system once and adding 16 ms to it on each iteration, you can call `clock_nanosleep` with the `TIMER_ABSTIME` flag to schedule exactly when the next wakeup will occur. If the next wakeup is already in the past because the loop iteration was too slow and the game has fallen behind, the call returns immediately and the sleep is skipped entirely.
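A minimal sketch of such a frame limiter, assuming a game loop you control. The `struct frame_limiter` type and its functions are hypothetical names, not part of any library or of the server. It keeps a running `CLOCK_MONOTONIC` deadline and advances it by one period after each wait.

```c
#define _POSIX_C_SOURCE 200809L
#include <errno.h>
#include <time.h>

#define NSEC_PER_SEC 1000000000L

/* Add ns nanoseconds to *t, keeping tv_nsec in [0, 1e9). */
static void timespec_add_ns(struct timespec *t, long ns)
{
    t->tv_sec  += ns / NSEC_PER_SEC;
    t->tv_nsec += ns % NSEC_PER_SEC;
    if (t->tv_nsec >= NSEC_PER_SEC) {
        t->tv_nsec -= NSEC_PER_SEC;
        t->tv_sec++;
    }
}

/* Hypothetical limiter state: absolute deadline of the next frame. */
struct frame_limiter {
    struct timespec deadline;
    long period_ns;
};

/* Start the running clock one period from now. */
static void frame_limiter_init(struct frame_limiter *fl, long period_ns)
{
    fl->period_ns = period_ns;
    clock_gettime(CLOCK_MONOTONIC, &fl->deadline);
    timespec_add_ns(&fl->deadline, period_ns);
}

/* Sleep until the current deadline, then advance it by one period.
   If the deadline has already passed, clock_nanosleep() returns at once.
   Returns 0 on success or the errno value from clock_nanosleep(). */
static int frame_limiter_wait(struct frame_limiter *fl)
{
    int ret;
    do {
        ret = clock_nanosleep(CLOCK_MONOTONIC, TIMER_ABSTIME, &fl->deadline, NULL);
    } while (ret == EINTR); /* retry after a signal; the deadline is unchanged */
    timespec_add_ns(&fl->deadline, fl->period_ns);
    return ret;
}
```

In a loop this would look like `frame_limiter_init(&fl, 16000000L);` followed by `for (;;) { run_game_logic(); frame_limiter_wait(&fl); }`, where `run_game_logic()` is a placeholder. Because the deadline always advances by exactly one period, a long stall leaves it in the past for several frames, and those frames run back-to-back until the loop catches up; if that burst is undesirable, one option is to reset the deadline to now plus one period whenever it has fallen more than a period behind.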

I would assume that the Windows build suffers from the same issue, though the solution there would be different.

As a proof of concept, I have implemented a small library that hooks `usleep` and changes this behaviour to use a running clock instead, calling `clock_nanosleep` directly with an absolute time rather than a relative one (source below).

```c
#define _GNU_SOURCE

#include <dlfcn.h>
#include <errno.h>
#include <stdio.h>
#include <time.h>
#include <unistd.h>

/* 16 ms: the fixed value the server passes to usleep() with -maxFPS 60 */
#define FRAME_DURATION_NS 16000000L
#define NSEC_PER_SEC      1000000000L

static int (*real_usleep)(useconds_t usec) = NULL;

/* Absolute CLOCK_MONOTONIC time of the next frame; zero until first use.
   Not thread-safe: assumes only the game loop calls usleep(16000). */
static struct timespec nextTime = { 0 };

__attribute__((constructor))
static void init_hook(void)
{
    /* look up the libc usleep() that this library shadows */
    real_usleep = (int (*)(useconds_t))dlsym(RTLD_NEXT, "usleep");
    if (!real_usleep)
        fprintf(stderr, "[mysleep] Error: could not find real usleep: %s\n",
                dlerror());
}

/* Add ns nanoseconds to *a, keeping tv_nsec normalised to [0, 1e9) */
static inline void tsAdd(struct timespec *a, long ns)
{
    a->tv_nsec += ns;
    while (a->tv_nsec >= NSEC_PER_SEC)
    {
        a->tv_nsec -= NSEC_PER_SEC;
        a->tv_sec++;
    }
}

/* Sleep until the next absolute frame deadline, then advance it.
   Returns 0 on success or an errno value from clock_nanosleep(). */
static int fpsSleep(void)
{
    /* first call: start the running clock one frame from now */
    if (nextTime.tv_sec == 0 && nextTime.tv_nsec == 0)
    {
        clock_gettime(CLOCK_MONOTONIC, &nextTime);
        tsAdd(&nextTime, FRAME_DURATION_NS);
    }

    /* if nextTime is already in the past (the frame overran),
       clock_nanosleep() returns immediately; retry if a signal interrupts */
    int ret;
    do
    {
        ret = clock_nanosleep(CLOCK_MONOTONIC, TIMER_ABSTIME, &nextTime, NULL);
    } while (ret == EINTR);

    /* add the delay to the running clock */
    tsAdd(&nextTime, FRAME_DURATION_NS);
    return ret;
}

int usleep(useconds_t usec)
{
    /* assume sleeps of exactly one frame are the game loop's limiter */
    if (usec == FRAME_DURATION_NS / 1000L)
    {
        int ret = fpsSleep();
        if (ret == 0)
            return 0;
        /* usleep() reports errors as -1 with errno set */
        errno = ret;
        return -1;
    }

    if (!real_usleep)
    {
        errno = ENOSYS;
        return -1;
    }
    return real_usleep(usec);
}
```

This can be compiled with the command:

```sh
gcc -O3 -fPIC -shared -o libmysleep.so mysleep.c -ldl
```

And injected into the game using the LD_PRELOAD environment variable:

```sh
LD_PRELOAD=/absolute/path/to/libmysleep.so ./ArmaReforgerServer -maxFPS 60
```

As a result, on a moderately loaded server that would normally start to see FPS drops, I am now seeing a rock-solid 60 FPS.

Please also note that this new logic uses `CLOCK_MONOTONIC`, which only moves forward and is not stepped when the system time changes, whereas `CLOCK_REALTIME` can jump when the system time is adjusted by the user or by the NTP daemon.
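As a small illustration of why this matters for frame timing, elapsed time is best measured as the difference of two `CLOCK_MONOTONIC` readings. A sketch, with the hypothetical helper names `clock_ns()` and `monotonic_elapsed_ns()`:

```c
#define _POSIX_C_SOURCE 200809L
#include <stdint.h>
#include <time.h>

/* Read the given clock as a single nanosecond count. */
static int64_t clock_ns(clockid_t id)
{
    struct timespec ts;
    clock_gettime(id, &ts);
    return (int64_t)ts.tv_sec * 1000000000LL + ts.tv_nsec;
}

/* Elapsed time since start_ns (a CLOCK_MONOTONIC reading). Because this
   clock is never stepped, the result cannot go negative, even if the wall
   clock (CLOCK_REALTIME) is changed in between; a difference of two
   CLOCK_REALTIME readings offers no such guarantee. */
static int64_t monotonic_elapsed_ns(int64_t start_ns)
{
    return clock_ns(CLOCK_MONOTONIC) - start_ns;
}
```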